Hadoop Course in Pune

Hadoop is an open-source, Java-based programming framework that supports the processing and storage of extremely large data sets in a distributed computing environment. It is part of the Apache project sponsored by the Apache Software Foundation.

Pay Fees After Satisfaction With Interview Guidance

Get expert guidance from our experienced professionals in every field.

Skills & Tools You'll Learn

Programming Fundamentals

- Algorithms & Data Structures

- Problem Solving

- OOP Concepts

- Version Control (Git)

Development Tools

- IDEs & Text Editors

- CLI & Terminal

- Package Managers

- Build Tools

Software Practices

- Agile & Scrum

- Testing (Unit & Integration)

- Code Reviews

- CI/CD Workflows

Platforms & Technologies

- Databases (SQL/NoSQL)

- Cloud (AWS, GCP)

- APIs (REST & GraphQL)

- Docker & Kubernetes

Our Certifications

Gain globally recognized certifications that validate your skills and boost your career.

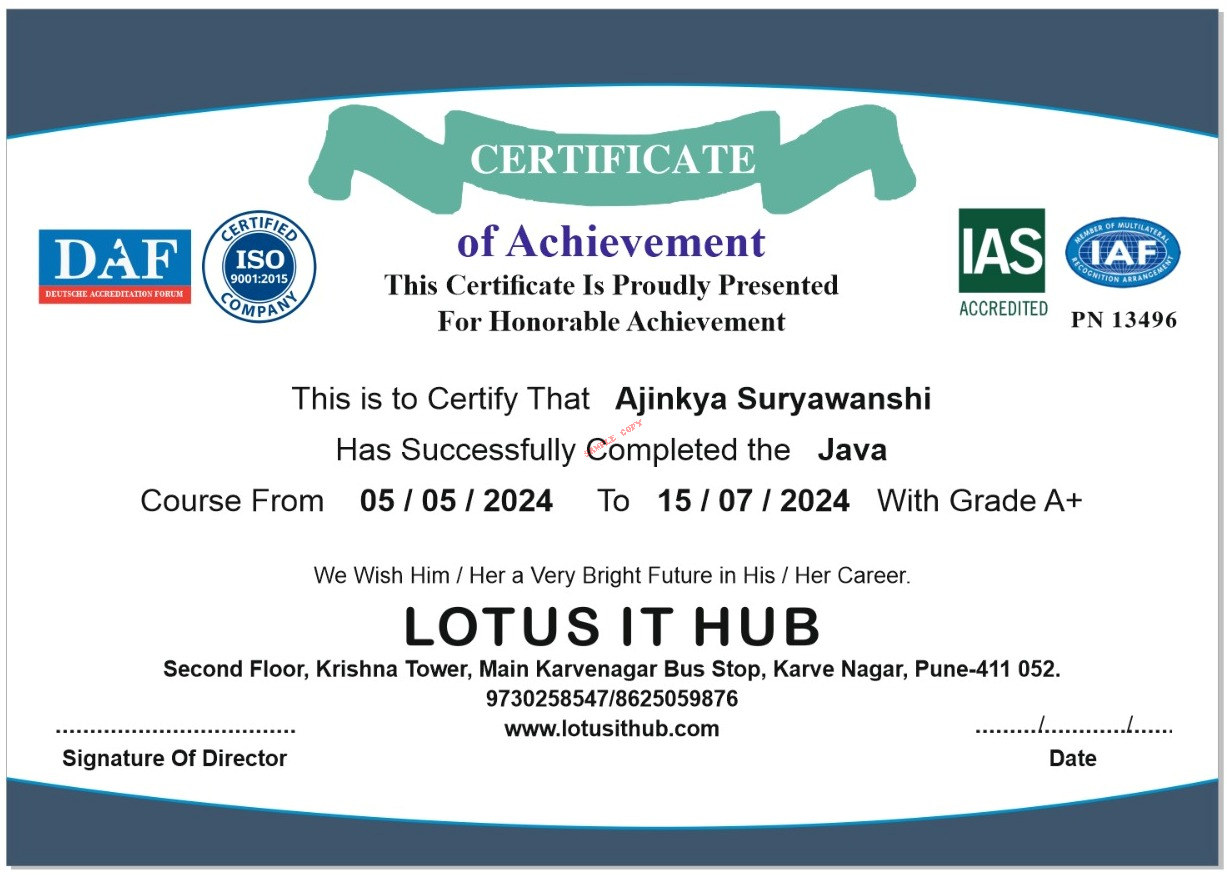

Earn Your Achievement Certificate

After completing your course, you'll receive a verified certificate from Lotus IT Hub that showcases your mastery of industry-relevant skills. You can share this certificate on LinkedIn or add it to your resume, and it can help you stand out in the job market.

- Globally recognized certificate

- Verification ID for authenticity

- Downloadable digital format

- Shareable on LinkedIn & portfolios

Why Choose Lotus IT Hub

Empowering your career through expert training

Affordable & Customized Programs

Flexible pricing and learning plans

Hands-On Project Learning

Real-world practice to boost your skills

One-on-One Mentorship

Personalized guidance from industry experts